Real or AI?

Experiment 7

Dear Esteemed Colleagues and Friends,

As our modern world becomes more integrated with our digital world, how do we discern between the authentic and the manufactured? The rate at which online technology changes can be dizzying for even the most technologically savvy of us. One such technology that is turning heads is AI-generated imagery. You may have already seen AI-generated images in your feeds. These images can be unnervingly realistic, blurring the line between truth and fiction. But what are the implications of this technology? Friends, let’s not be afraid; let’s understand.

Yours Truly,

Mad Black Scientist

Experiment 7:

Observation:

OpenAI has released two major projects that have been gaining popularity online and off: GPT-3 and DALL-E. You may know GPT-3 through ChatGPT, a chatbot built on a later model in the same family that produces human-like responses to your calculus problems, coding issues, writing prompts, and more. You have probably also seen AI-generated images circulating on the internet, such as the pope in head-to-toe Balenciaga (that one was reportedly made with Midjourney, a similar image generator). GPT-3 is a Large Language Model (LLM), a kind of artificial intelligence that has been trained on vast quantities of text; DALL-E is a related text-to-image model trained on images paired with text. In the case of GPT-3, the training data covers a large share of the text on the public internet. Through three main building blocks (Word Embedding, Attention Mechanisms, and Transformers), the AI takes prompts and outputs human-like responses. Similarly, DALL-E uses text inputs to output new images [1]. These models are extremely complex and are often described as a “black box”, meaning it’s not entirely clear how and why they arrive at a particular output [2].

What is clear is that the input directly shapes the output. So the kinds of images, words, texts, and prompts that are fed to the models will drastically change their responses. It should be no surprise that the outputs of these models can carry strong racist, sexist, or violent biases. The many news stories showing that LLMs can be pushed to output hate speech, violent imagery, and misinformation are very telling of how this technology can cause unintended harm [3].

While groups like OpenAI have safeguards to keep their models from producing hate speech or violent imagery, the technology can still distort our sense of reality. For example, image-generation AI can produce art in the style of any artist, like this fake Picasso. It can also generate hyper-realistic images that help false news spread, such as the fake image of former President Trump being arrested.

Question:

How do we tell the fake from the real?

Hypothesis:

We can’t.

Experiment:

There aren’t many tools yet to help us discern between AI-generated images and real images. There are news networks working against misinformation, and artists speaking out about their work being used to feed AI models. However, the scale and speed at which AI can produce new images, texts, or code vastly outpaces anything a news cycle or Instagram post can combat.

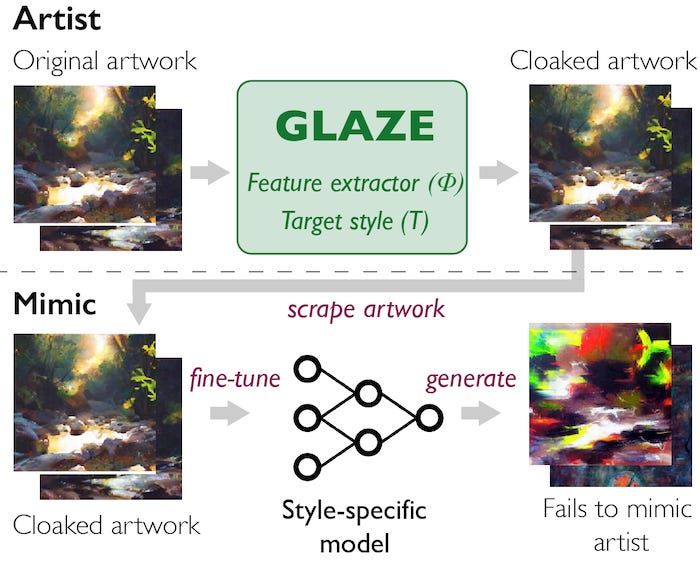

Yet there is one tool that I find particularly interesting, called Glaze. If you’re a visual artist and you don’t want your style copied by an AI, you should use Glaze.

The experiment below was pulled from the Glaze team’s research paper [4]:

To run this experiment, follow these simple steps:

1. Download Glaze.

2. Cloak your images or artwork.

3. Try to use a cloaked image as an input in DALL-E mini (which is currently free).

4. Watch as DALL-E cannot re-create or create variations of the image.

5. Repeat with an uncloaked image and compare the results.

Glaze adds a “cloak” to images before you upload them online so that AI models cannot generate new images in a similar style. This cloaking only works on new artwork that hasn’t already been circulated on the internet, so it could not have prevented the fake Picassos linked above. But imagine this same concept being applied to your selfies, writing, or any content you have posted online.
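For the curious, here is a rough Python sketch of the general idea behind a cloak: change each pixel by a tiny, capped amount so that what a model "sees" drifts toward a different style. This is not Glaze's actual algorithm. The linear `features` function, the `WEIGHTS` table, the four-pixel "images", and the `budget` value are all made-up assumptions for illustration; the real tool works on full images with a neural network.

```python
import math

# Hypothetical stand-in for a model's style detector: a fixed linear map
# from 4 pixel values to 2 "style features". Real models are far larger.
WEIGHTS = [
    [1.0, -1.0, 0.5, 0.0],
    [0.0, 0.5, 1.0, -1.0],
]

def features(pixels, weights):
    """Compute toy style features for a list of pixel values in [0, 1]."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cloak(pixels, target, weights, budget=0.05, step=0.01, rounds=200):
    """Nudge pixels so their features move toward `target`, without
    changing any single pixel by more than `budget`."""
    delta = [0.0] * len(pixels)
    for _ in range(rounds):
        current = features([p + d for p, d in zip(pixels, delta)], weights)
        error = [c - t for c, t in zip(current, target)]
        for i in range(len(pixels)):
            # Gradient of half the squared feature distance for pixel i.
            grad = sum(e * row[i] for e, row in zip(error, weights))
            d = delta[i] - step * grad
            d = max(-budget, min(budget, d))              # cap the change
            d = max(-pixels[i], min(1.0 - pixels[i], d))  # stay a valid pixel
            delta[i] = d
    return [p + d for p, d in zip(pixels, delta)]

artwork = [0.2, 0.5, 0.8, 0.4]       # made-up "original" image
other_style = [0.9, 0.1, 0.3, 0.7]   # made-up image in a different style
target = features(other_style, WEIGHTS)

cloaked = cloak(artwork, target, WEIGHTS)
print("largest pixel change:", max(abs(c - p) for c, p in zip(cloaked, artwork)))
print("feature distance before:", distance(features(artwork, WEIGHTS), target))
print("feature distance after: ", distance(features(cloaked, WEIGHTS), target))
```

The point of the toy is the trade-off: the cap keeps the picture looking almost the same to a person, while the features the model relies on move away from the artist's real style.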

The continued development of cloaking mechanisms would mean that AI models couldn’t use your content to generate anything like it. It would allow you to control the inputs of AI models so that your art, writing, and face aren’t misused. Of course, as AI models advance, these tools would have to advance as well.

Conclusion:

Just two weeks ago, thousands of AI technologists signed a letter calling for a six-month pause on the development of the most powerful AI systems [5]. The letter asks some cautionary questions about the developing technology:

Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.

Such concern is warranted when rolling out technology with the power to harm and influence people on a mass scale. I look forward to more tools and policies developed over the next few months to ensure protections against these massive experiments.

Glossary:

GPT - Generative Pre-trained Transformer

Word Embedding - An algorithm used in LLMs to represent the meaning of words in a numerical form so that it can be fed to and processed by the AI model.

Attention Mechanisms - An algorithm used in LLMs that enables the AI to focus on specific parts of the input text (for example, the sentiment-related words) when generating an output.

Transformers - A type of neural network architecture, popular in LLM research, that uses self-attention mechanisms to process input data.

(Reference: What is a Large Language Model (LLM), Peter Foy)
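To make the glossary a little more concrete, here is a minimal Python sketch of word embeddings feeding a self-attention step. The embedding numbers are invented for illustration (real models learn vectors with hundreds or thousands of dimensions), and real Transformers also apply learned query, key, and value transformations that are left out here to keep things short.

```python
import math

# Hypothetical 3-number embeddings, made up for this example.
EMBEDDINGS = {
    "the":   [0.1, 0.0, 0.1],
    "movie": [0.2, 0.9, 0.1],
    "was":   [0.1, 0.1, 0.0],
    "great": [0.9, 0.3, 0.8],
}

def dot(a, b):
    """Dot product: larger when two vectors point in similar directions."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention where each word's embedding
    serves as its own query, key, and value (a simplification)."""
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        scores = [dot(query, key) / scale for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted blend of every word's vector.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(len(query))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "movie", "was", "great"]
vectors = [EMBEDDINGS[t] for t in tokens]
outputs, weights = self_attention(vectors)

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word "pays attention" to every word in the sentence. A Transformer stacks many layers of this step, each with its own learned parameters.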

References:

How DALLE-2 Works, Aditya Ramesh

What is a Large Language Model (LLM), Peter Foy